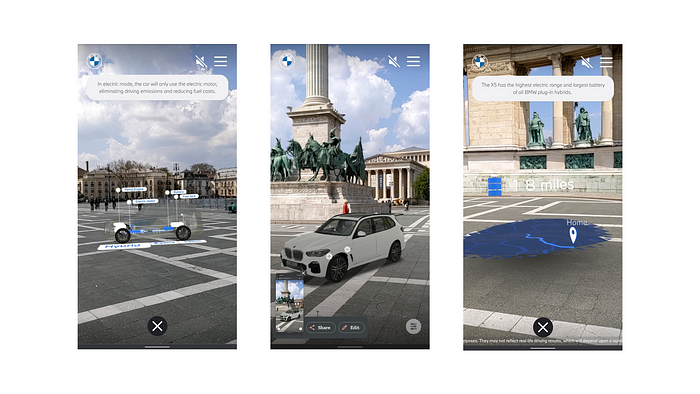

BMW Virtual Viewer is an augmented reality web application accompanied by an audible chatbot.

In this article we explore the journey, the main content provider, which dictates the flow: the sequence of interactions the user can engage in. Then we discuss how augmented reality is integrated.

Journey

A journey can mean travelling from point A to point B. As a term, user journey means the user’s experiences while interacting with an application. The chatbot guides the user through the experience; in that sense, she takes the user on a journey.

… Alright, alright, what is the point? In the context of this application, the journey simply means a sequence of events with some pauses in between. After the tutorial has finished and the model is placed in the 3D/real world, the chatbot starts small and mentions, for example, that the radio can be turned on by asking her to do so, or that the doors can be opened. After around 20 seconds, when the user has had enough time to explore the model, a new event happens: the personalisation panel appears and, as expected, the chatbot explains what it is all about.

As such, the journey is the arc of the application: it dictates what happens when the user hasn’t started anything herself.
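To make the idea concrete, here is a minimal sketch of a journey as an ordered list of steps, each allowed to fire a given time after the previous one. The names (`JourneyStep`, `Journey`, the step ids) are hypothetical, not the app’s actual code. Time is advanced with an explicit `tick` rather than `setTimeout`, so pausing the journey simply means not ticking it.

```typescript
// Hypothetical journey step: fires `delayMs` after the previous step has fired.
interface JourneyStep {
  id: string;
  delayMs: number;
  run: () => void;
}

// Minimal journey runner (illustrative sketch, not the app's implementation).
class Journey {
  private readonly steps: JourneyStep[];
  private index = 0; // next step to fire
  private elapsedMs = 0; // time accumulated since the last step fired

  constructor(steps: JourneyStep[]) {
    this.steps = steps;
  }

  // Advance the journey clock by dtMs. Callers skip this while the journey is paused,
  // so paused time does not count. Fires at most one step per call and returns its id.
  tick(dtMs: number): string | null {
    const step = this.steps[this.index];
    if (!step) return null; // journey finished
    this.elapsedMs += dtMs;
    if (this.elapsedMs < step.delayMs) return null;
    this.elapsedMs = 0;
    this.index += 1;
    step.run();
    return step.id;
  }

  get finished(): boolean {
    return this.index >= this.steps.length;
  }
}

// Usage mirroring the article: a radio hint first, the personalisation panel ~20 s later.
const journey = new Journey([
  { id: "radio-hint", delayMs: 0, run: () => console.log("You can ask me to turn on the radio.") },
  { id: "personalisation-panel", delayMs: 20_000, run: () => console.log("Show personalisation panel") },
]);
journey.tick(0); // fires "radio-hint"
```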

Consistency

Obviously, the chatbot shouldn’t start explaining a different topic while another interaction is in progress. Since the app ultimately decides what is played and when, it is solely responsible for the chatbot’s audio lines. The journey should continue only when nothing else is happening, so many conditions pause the journey flow, such as:

- audio is playing

- user is recording a command

- a grace period after a command has been recorded

- animation is playing

- window is out of focus

- waiting for server response

… and many more.
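The pause rule can be expressed as a single predicate over the app state. This is a sketch under assumptions: the `AppState` fields are hypothetical stand-ins for the real store, and the 3-second grace period is an illustrative value, not one given in the article.

```typescript
// Hypothetical snapshot of the app state; field names are illustrative only.
interface AppState {
  audioPlaying: boolean;
  recording: boolean; // user is recording a voice command
  lastCommandAt: number | null; // timestamp (ms) of the last recorded command
  animationPlaying: boolean;
  windowFocused: boolean;
  pendingRequests: number; // server responses still awaited
}

// Assumed grace period after a command; the real value is not stated in the article.
const COMMAND_GRACE_MS = 3000;

// Lists every reason the journey is currently paused; an empty list means it may advance.
function pauseReasons(s: AppState, now: number): string[] {
  const reasons: string[] = [];
  if (s.audioPlaying) reasons.push("audio");
  if (s.recording) reasons.push("recording");
  if (s.lastCommandAt !== null && now - s.lastCommandAt < COMMAND_GRACE_MS) {
    reasons.push("grace-period");
  }
  if (s.animationPlaying) reasons.push("animation");
  if (!s.windowFocused) reasons.push("unfocused");
  if (s.pendingRequests > 0) reasons.push("server");
  return reasons;
}

function canAdvanceJourney(s: AppState, now: number): boolean {
  return pauseReasons(s, now).length === 0;
}
```

Returning the reasons rather than a bare boolean makes it easy to log why the journey is waiting, which helps when "many more" conditions pile up.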

Interactions

There are multiple types of interactions that either the journey or the user herself can trigger:

- audio

- audio + Call-to-action button

- audio + Confirmation panel (do you want to hear more about …? yes/no)

  - when confirmed, an animation + audio description starts

- audio + Quiz

- audio + Information panel
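One way to model these interaction types is a TypeScript discriminated union, so every handler has to deal with each kind explicitly. The shape below is a hypothetical sketch (field names such as `audioId`, `url` or `onConfirm` are illustrative), not the app’s actual data model.

```typescript
// Every interaction plays audio; `kind` decides what accompanies it.
type Interaction =
  | { kind: "audio"; audioId: string }
  | { kind: "cta"; audioId: string; url: string }
  | {
      kind: "confirmation";
      audioId: string;
      question: string;
      // What starts when the user answers "yes": an animation plus an audio description.
      onConfirm: { animationId: string; audioId: string };
    }
  | { kind: "quiz"; audioId: string; quizId: string }
  | { kind: "info"; audioId: string; panelId: string };

// Name of the UI component shown alongside the audio, if any.
function companionComponent(i: Interaction): string | null {
  switch (i.kind) {
    case "audio":
      return null;
    case "cta":
      return "CallToActionButton";
    case "confirmation":
      return "ConfirmationPanel";
    case "quiz":
      return "Quiz";
    case "info":
      return "InformationPanel";
  }
}

// Follow-up after a confirmation panel is answered; null if nothing should start.
function followUp(i: Interaction, confirmed: boolean): { animationId: string; audioId: string } | null {
  return i.kind === "confirmation" && confirmed ? i.onConfirm : null;
}
```

Because the `switch` is exhaustive, adding a new interaction kind makes the compiler point at every place that still needs to handle it.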

Some components, like most of the call-to-action external link buttons, are tied to their corresponding intent (more on this in the upcoming article), so they are visible while the intent’s audio is playing. Others, like confirmation dialogs, stay visible for a fixed amount of time.
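The two visibility rules fit into one small predicate. This is a hypothetical sketch: `Visibility`, `PlaybackState` and the intent ids are illustrative names, and timestamps are assumed to be in milliseconds.

```typescript
// "intent": visible while that intent's audio plays; "timed": visible for a fixed duration.
type Visibility =
  | { mode: "intent"; intentId: string }
  | { mode: "timed"; shownAt: number; durationMs: number };

interface PlaybackState {
  playingIntentId: string | null; // intent whose audio is currently playing, if any
}

function isVisible(v: Visibility, playback: PlaybackState, now: number): boolean {
  if (v.mode === "intent") return playback.playingIntentId === v.intentId;
  // Timed components show from `shownAt` until `shownAt + durationMs` (exclusive).
  return now >= v.shownAt && now < v.shownAt + v.durationMs;
}
```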

Let’s steer into another reality! Although the augmentation is fairly static, it is breathtaking mainly because of how detailed the model is. But how is it integrated?

WebGL

Vue only renders a few buttons and boxes, but we cannot discuss the frontend without talking about the main purpose of the application: showing a finely detailed BMW model in augmented reality.

Architecture-wise, this part is nicely separated into its own module. The guys over at Antiloop created this aspect of the application.

Integration

It is a separate project whose bundle.js is imported in the main index.html. The bundle defines a class called ARManager on the window object. To get going, this class has to be instantiated. Its constructor receives an HTML element, which will be the “canvas” for arManager. From then on, the instantiated object can be used to transfer information to and from the augmented reality.
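A defensive way to perform this instantiation is sketched below. Only the constructor taking an element comes from the article; the `createArManager` helper, the generic element type and the `ar-container` id are assumptions, chosen so the sketch also runs outside the browser.

```typescript
// Only what the article states is modelled: a constructor that takes the target element.
type ARManagerInstance = object;
type ARManagerConstructor<E> = new (element: E) => ARManagerInstance;

// Hypothetical helper: looks up ARManager on a window-like object and instantiates it.
// In the browser it would be called as (container id is hypothetical):
//   createArManager(window as any, document.getElementById("ar-container"));
function createArManager<E>(
  host: { ARManager?: ARManagerConstructor<E> },
  element: E | null,
): ARManagerInstance {
  const Ctor = host.ARManager;
  if (!Ctor) {
    // bundle.js must be loaded (via its <script> tag in index.html) before the app runs.
    throw new Error("window.ARManager is missing: is bundle.js loaded?");
  }
  if (element === null) {
    throw new Error("AR container element not found");
  }
  return new Ctor(element);
}
```

Failing loudly here beats a vague `undefined is not a constructor` later, since a missing or late-loaded bundle is the most likely integration error.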

Conventionally, “passing data in” (like requesting to load a given model, starting an animation or changing the color of the model) is done through function calls. The other direction of the information flow (like being notified about the user entering or leaving the model, animation lifecycle events, etc.) happens through callbacks. All of this is handled by a separate Vue store module, in which actions are mapped to arManager function calls.
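The split between calls in and callbacks out can be sketched as a Vuex-style module. This is an illustration under assumptions: the arManager method and callback names (`loadModel`, `playAnimation`, `setColor`, `onUserEnteredModel` and so on) are hypothetical, and the module is written as a plain object so the sketch runs without importing vuex.

```typescript
// Hypothetical arManager surface: function calls carry data in, callbacks carry events out.
interface ArManagerLike {
  loadModel(modelId: string): void;
  playAnimation(animationId: string): void;
  setColor(colorId: string): void;
  onUserEnteredModel?: () => void;
  onUserLeftModel?: () => void;
  onAnimationEnded?: (animationId: string) => void;
}

interface ArState {
  insideModel: boolean; // is the user currently inside the car?
  currentAnimation: string | null; // animation in progress, if any
}

type Commit = (type: string, payload?: unknown) => void;
interface ActionContext {
  commit: Commit;
}

// Builds a Vuex-style module (state, mutations, actions) around an arManager instance.
function createArModule(ar: ArManagerLike) {
  return {
    namespaced: true,
    state: (): ArState => ({ insideModel: false, currentAnimation: null }),
    mutations: {
      setInsideModel(state: ArState, inside: boolean) {
        state.insideModel = inside;
      },
      setAnimation(state: ArState, animationId: string | null) {
        state.currentAnimation = animationId;
      },
    },
    actions: {
      // Data in: each action maps to an arManager function call.
      loadModel(_ctx: ActionContext, modelId: string) {
        ar.loadModel(modelId);
      },
      playAnimation({ commit }: ActionContext, animationId: string) {
        commit("setAnimation", animationId);
        ar.playAnimation(animationId);
      },
      setColor(_ctx: ActionContext, colorId: string) {
        ar.setColor(colorId);
      },
      // Data out: register callbacks that turn AR events into mutations.
      bindCallbacks({ commit }: ActionContext) {
        ar.onUserEnteredModel = () => commit("setInsideModel", true);
        ar.onUserLeftModel = () => commit("setInsideModel", false);
        ar.onAnimationEnded = () => commit("setAnimation", null);
      },
    },
  };
}
```

In a real store, `bindCallbacks` would be dispatched once, right after arManager is instantiated, so the rest of the app only ever talks to the store and never to arManager directly.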

For arManager to work properly, camera permission must be granted. On the web, this is requested through the navigator.mediaDevices.getUserMedia function. The application has to make this call itself, as 8th Wall only signals that the permission needs to be given.
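Requesting the permission up front might look like the sketch below. `getUserMedia` and the `facingMode: "environment"` constraint (the rear camera) are standard Web APIs; the structural types exist only so the sketch runs outside a browser, and stopping the tracks right away, so that the AR engine can open the camera itself, is an assumption rather than something the article states.

```typescript
// Minimal structural types; in the browser, navigator.mediaDevices satisfies this shape.
interface TrackLike {
  stop(): void;
}
interface StreamLike {
  getTracks(): TrackLike[];
}
interface MediaDevicesLike {
  getUserMedia(constraints: { video: { facingMode: string }; audio: boolean }): Promise<StreamLike>;
}

// Asks for camera access; resolves true if granted, false if denied or unavailable.
async function requestCameraPermission(devices: MediaDevicesLike | undefined): Promise<boolean> {
  if (!devices) return false; // mediaDevices is undefined outside secure (HTTPS) contexts
  try {
    const stream = await devices.getUserMedia({ video: { facingMode: "environment" }, audio: false });
    // Assumption: release the camera immediately so the AR engine can open it itself.
    stream.getTracks().forEach((track) => track.stop());
    return true;
  } catch {
    // e.g. NotAllowedError (user denied) or NotFoundError (no camera)
    return false;
  }
}
```

In the browser it would be called as `requestCameraPermission(navigator.mediaDevices)`, ideally from a user gesture such as the tutorial’s start button.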

Model interaction

There are several ways the user can interact with the model.

- Tapping on the door opens/closes it

- Tapping on a hotspot opens an information panel and the chatbot explains that feature

- The user can ask the chatbot to turn on the lights, and she will do so

- Simply being in certain positions relative to the model triggers a chatbot explanation. For example, if the user is in front of the car, the kidney grille will be mentioned.
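The position-based trigger boils down to a bit of geometry. Below is a sketch under explicit assumptions: the model sits at the origin with its nose pointing along +Z, the camera position is known in the model’s coordinate frame, and the 45° sector boundaries are illustrative. Only the front/kidney grille pairing comes from the article; `zoneOf`, `ZONE_TOPICS` and `PositionTriggers` are hypothetical names.

```typescript
type Zone = "front" | "rear" | "side";

// Assumption: (x, z) is the camera position on the ground plane, in the model's frame,
// with the car's nose pointing along +Z.
function zoneOf(x: number, z: number): Zone {
  const angleDeg = (Math.atan2(x, z) * 180) / Math.PI; // 0° = straight ahead of the nose
  const a = Math.abs(angleDeg);
  if (a <= 45) return "front";
  if (a >= 135) return "rear";
  return "side";
}

// Hypothetical zone-to-topic table; only front -> kidney grille comes from the article.
const ZONE_TOPICS: Partial<Record<Zone, string>> = { front: "kidney-grille" };

// Emits a zone's topic only when the user enters that zone, and each topic at most once.
class PositionTriggers {
  private lastZone: Zone | null = null;
  private readonly mentioned = new Set<string>();

  update(x: number, z: number): string | null {
    const zone = zoneOf(x, z);
    if (zone === this.lastZone) return null; // still in the same zone
    this.lastZone = zone;
    const topic = ZONE_TOPICS[zone];
    if (!topic || this.mentioned.has(topic)) return null;
    this.mentioned.add(topic);
    return topic;
  }
}
```

Remembering the last zone means a topic fires when the user walks into a zone, not on every frame she stands there; the returned topic would still have to pass the journey’s pause conditions before the chatbot speaks.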

To be continued!

The first part of the series can be found here. This article originally appeared on our Medium blog.
